Text from the ChatGPT page of the OpenAI website is shown in this photo, in New York, Feb. 2, 2023. (AP Photo/Richard Drew)

Two personal injury lawyers were fined $5,000 Thursday for using fake cases and citations generated by ChatGPT in court documents and then lying about it in open court. The Manhattan judge who imposed the fine left lawyers Steven A. Schwartz and Peter LoDuca to their consciences to decide whether the phony filing warranted personal apologies to the judges.

Schwartz submitted an affidavit to the court explaining that he had used the artificial intelligence program ChatGPT to “supplement the legal research” while drafting the documents.

LoDuca, the attorney of record in the case, signed a brief filed with the court that contained citations to cases that did not exist. Schwartz, the attorney with three decades of experience who drafted the document, did not sign the filing because he is not admitted to practice in federal court.

Schwartz later told the judge that the program “has revealed itself to be unreliable.” As it turns out, Schwartz had been skeptical about the reliability of the AI-generated case law. Instead of researching the matter himself, though, he turned once again to ChatGPT to ask whether the case was real.

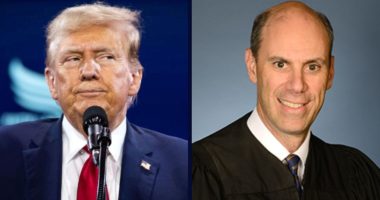

“Many harms flow from the submission of fake opinions,” wrote George W. Bush appointee U.S. District Judge Kevin Castel.

Castel listed a litany of “mischief” that could result from even an innocent mistake:

The opposing party wastes time and money in exposing the deception. The Court’s time is taken from other important endeavors. The client may be deprived of arguments based on authentic judicial precedents. There is potential harm to the reputation of judges and courts whose names are falsely invoked as authors of the bogus opinions and to the reputation of a party attributed with fictional conduct. It promotes cynicism about the legal profession and the American judicial system. And a future litigant may be tempted to defy a judicial ruling by disingenuously claiming doubt about its authenticity.

According to Castel, however, the lawyers in this case had not been so innocent. The judge found that the attorneys “doubled down” on the fake cases cited, then “did not begin to dribble out the truth” until much later, after the court had already put sanctions on the table.

To make matters worse, as the ChatGPT saga began to unfold via court filings, LoDuca submitted a document indicating that he would be on vacation and could not respond promptly regarding the veracity of the citations. That statement, too, turned out to be false.

Castel summarized his finding:

Mr. LoDuca’s statement was false and he knew it to be false at the time he made the statement. Under questioning by the Court at the sanctions hearing, Mr. LoDuca admitted that he was not out of the office on vacation.

Later, LoDuca admitted that he made the statement about a vacation to cover for Schwartz, who had been out of the office and needed additional time to prepare for the upcoming hearing about the fake citations.

“The lie had the intended effect of concealing Mr. Schwartz’s role in preparing the March 1 Affirmation and the April 25 Affidavit and concealing Mr. LoDuca’s lack of meaningful role in confirming the truth of the statements in his affidavit. This is evidence of the subjective bad faith of Mr. LoDuca.”

The judge also looked closely at the cases ChatGPT generated for the two attorneys — and found them significantly lacking. One case showed “stylistic and reasoning flaws” that would not normally be in an appellate decision.

“Its legal analysis is gibberish,” said Castel.

Castel also took issue with Schwartz’s attempt to downplay the role ChatGPT played in his legal research. The judge included the following colloquy in his sanctions order:

THE COURT: But ChatGPT was not supplementing your research. It was your research, correct?

MR. SCHWARTZ: Correct. It became my last resort. So I guess that’s correct.

Castel wrote that the characterization of ChatGPT as a supplement was “a misleading attempt to mitigate his actions by creating the false impression that he had done other, meaningful research on the issue and did not rely exclusively on an AI chatbot, when, in truth and in fact, it was the only source of his substantive arguments.”

The judge also called out Schwartz’s skepticism about ChatGPT’s case law. Court documents included screenshots from a smartphone in which Schwartz asked ChatGPT, “Is Varghese a real case” and “Are the other cases you provided fake?”

ChatGPT responded that it had supplied “real” authorities that could be found through Westlaw, LexisNexis and the Federal Reporter.

Finding that the lawyers’ conduct had been in “bad faith,” Castel imposed sanctions under Rule 11 on the attorneys. Per Castel’s order, the attorneys must pay $5,000 to the court for their wrongdoing.

Castel ordered that the lawyers must inform their client and any judges whose names were wrongfully invoked in the filings. However, Castel declined to order an apology, writing, “The Court will not require an apology from Respondents because a compelled apology is not a sincere apology. Any decision to apologize is left to Respondents.”
